Understanding CPU Microarchitecture and Its Impact on Performance

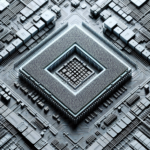

Central Processing Units (CPUs) are the heart of modern computing devices, driving everything from personal computers to servers and mobile devices. The performance of a CPU is not solely determined by its clock speed or the number of cores it has; the underlying microarchitecture plays a crucial role. This article delves into the intricacies of CPU microarchitecture and explores how it impacts overall performance.

What is CPU Microarchitecture?

CPU microarchitecture refers to the design and organization of the various components within a CPU. It encompasses the layout of the execution units, cache hierarchy, pipeline stages, and other critical elements that determine how efficiently a CPU can execute instructions. While the instruction set architecture (ISA) defines the set of instructions a CPU can execute, the microarchitecture dictates how these instructions are implemented and processed.

Key Components of CPU Microarchitecture

To understand CPU microarchitecture, it is essential to familiarize oneself with its key components:

- Execution Units: These are the parts of the CPU that perform arithmetic and logical operations. They include Arithmetic Logic Units (ALUs), Floating Point Units (FPUs), and other specialized units.

- Pipeline: The pipeline is a series of stages (such as fetch, decode, execute, and write-back) through which each instruction passes. Each stage handles one part of an instruction’s processing, so several instructions can be in flight at once, each in a different stage.

- Cache Hierarchy: Caches are small, fast memories located close to the CPU cores. They hold recently and frequently used instructions and data so that most accesses avoid the much slower trip to main memory. The hierarchy typically includes L1, L2, and L3 caches, each level larger but slower than the one before it.

- Branch Predictors: These components predict the direction of branch instructions to minimize pipeline stalls and improve execution flow.

- Out-of-Order Execution: This technique allows the CPU to execute instructions out of their original order to optimize resource utilization and reduce idle time.

- Instruction Decoders: These units translate complex instructions into simpler micro-operations that the CPU can execute more efficiently.

Impact of Microarchitecture on Performance

The microarchitecture of a CPU significantly influences its performance. Here are some key aspects where microarchitecture impacts performance:

Instruction Throughput

The design of the pipeline and the number and efficiency of the execution units determine instruction throughput, commonly measured as instructions per cycle (IPC). Pipelining overlaps the stages of successive instructions, and a design with several execution units can start more than one instruction in the same cycle. Both raise throughput and overall performance, as long as stalls are rare.
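As a rough illustration of the throughput point, the sketch below uses the textbook model of an ideal, stall-free pipeline that accepts one instruction per cycle: the first instruction needs as many cycles as there are stages, and each later instruction completes one cycle after the previous one. The function names, the 1000-instruction workload, and the five-stage depth are illustrative assumptions, not a description of any real CPU.

```python
def pipelined_cycles(num_instructions: int, num_stages: int) -> int:
    """Cycles to finish all instructions in an ideal, stall-free scalar pipeline."""
    if num_instructions == 0:
        return 0
    # The first instruction fills the pipeline; each later one completes one cycle apart.
    return num_stages + (num_instructions - 1)


def unpipelined_cycles(num_instructions: int, num_stages: int) -> int:
    """Cycles if each instruction must pass through every stage before the next starts."""
    return num_instructions * num_stages


def ipc(num_instructions: int, cycles: int) -> float:
    """Instructions per cycle, the usual throughput metric."""
    return num_instructions / cycles


if __name__ == "__main__":
    n, stages = 1000, 5  # hypothetical workload size and pipeline depth
    for label, cycles in (
        ("unpipelined", unpipelined_cycles(n, stages)),
        ("pipelined", pipelined_cycles(n, stages)),
    ):
        print(f"{label}: {cycles} cycles, IPC = {ipc(n, cycles):.3f}")
```

In this idealized model the pipelined design approaches one instruction per cycle for long instruction streams. Real pipelines fall short of that whenever hazards or cache misses force stalls.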

Latency

Latency refers to the time it takes for an instruction to pass through the pipeline from start to finish. Branch prediction and out-of-order execution do not shorten an individual instruction’s path through the pipeline. Instead, they reduce the effective latency a program sees by avoiding pipeline stalls and by overlapping long-latency operations, such as cache misses, with independent work.
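One common back-of-the-envelope way to see how stalls add up is to treat mispredicted branches as a fixed penalty on top of a base cycles-per-instruction (CPI) figure. The sketch below assumes that simple additive model; the function name and all numbers are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def effective_cpi(base_cpi: float, branch_fraction: float,
                  mispredict_rate: float, mispredict_penalty: float) -> float:
    """Average cycles per instruction once branch misprediction stalls are included.

    branch_fraction:    share of instructions that are branches (0..1)
    mispredict_rate:    share of branches that are predicted wrongly (0..1)
    mispredict_penalty: cycles lost each time a prediction is wrong
    """
    stall_cycles_per_instruction = branch_fraction * mispredict_rate * mispredict_penalty
    return base_cpi + stall_cycles_per_instruction


if __name__ == "__main__":
    # Hypothetical figures: 20% branches, 15-cycle penalty, two predictor qualities.
    for rate in (0.05, 0.01):
        cpi = effective_cpi(1.0, 0.20, rate, 15)
        print(f"mispredict rate {rate:.0%}: effective CPI = {cpi:.2f}")
```

Under these assumed numbers, improving the predictor from a 5% to a 1% miss rate lowers the effective CPI from about 1.15 to about 1.03.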

Cache Performance

The cache hierarchy plays a crucial role in reducing memory access latency. A well-designed cache system with efficient algorithms for data placement and replacement can significantly improve performance by reducing the time the CPU spends waiting for data from main memory.
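The effect of a cache hierarchy is often summarized with the average memory access time (AMAT), where each level contributes its hit time plus its miss rate times the cost of going one level further out. The sketch below applies that standard formula; the latencies and miss rates are made-up values for illustration, and the miss rates are assumed to be local (measured against the accesses that actually reach that level).

```python
def amat(levels, memory_latency):
    """Average memory access time in cycles.

    levels: list of (hit_time_cycles, local_miss_rate) tuples, ordered from L1 outward.
    memory_latency: cycles to fetch from main memory after the last cache level misses.
    """
    # Work from the outermost level inward: the cost of a miss at one level
    # is the average access time of the level behind it.
    cost = memory_latency
    for hit_time, miss_rate in reversed(levels):
        cost = hit_time + miss_rate * cost
    return cost


if __name__ == "__main__":
    # Hypothetical hierarchy: L1, L2, L3 as (hit time in cycles, local miss rate).
    hierarchy = [(4, 0.05), (12, 0.30), (40, 0.50)]
    print(f"With caches:    {amat(hierarchy, 200):.1f} cycles")
    print(f"Without caches: {amat([], 200):.1f} cycles")
```

With these assumed values the average access costs about 6.7 cycles instead of 200, which is the gap the paragraph above refers to.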

Power Efficiency

Microarchitecture also impacts the power efficiency of a CPU. Techniques like dynamic voltage and frequency scaling (DVFS) and power gating reduce power consumption by lowering voltage and clock frequency under light load and by cutting power to idle blocks, at little cost to performance when full speed is not needed. Efficient microarchitectural designs can achieve a balance between high performance and low power consumption.
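The reason DVFS is effective can be seen from the usual first-order approximation for dynamic (switching) power in CMOS logic, which grows with the square of the supply voltage and linearly with clock frequency. The sketch below encodes that approximation; it ignores static (leakage) power, and the function names and example values are assumptions for illustration only.

```python
def dynamic_power(capacitance_f: float, voltage_v: float,
                  frequency_hz: float, activity: float = 1.0) -> float:
    """Approximate dynamic power in watts: activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


def relative_power(voltage_scale: float, frequency_scale: float) -> float:
    """Dynamic power after scaling voltage and frequency, as a fraction of the original."""
    return voltage_scale ** 2 * frequency_scale


if __name__ == "__main__":
    # Hypothetical operating point: 1 nF switched capacitance, 1.0 V, 3 GHz.
    print(f"Baseline: {dynamic_power(1e-9, 1.0, 3e9):.2f} W")
    # Lowering both voltage and frequency by 20% roughly halves dynamic power.
    print(f"After 0.8x V and 0.8x f: {relative_power(0.8, 0.8):.3f} of baseline")
```

Because voltage enters squared, a modest reduction in voltage and frequency together saves far more power than the performance it gives up, at least within this simplified model.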

Scalability

Modern CPUs often feature multiple cores to handle parallel workloads. The microarchitecture determines how well these cores can communicate and share resources. Efficient inter-core communication and resource sharing are essential for scaling performance across multiple cores.
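A standard way to reason about multi-core scaling, although not one this article derives, is Amdahl’s law: the serial part of a workload limits the speedup no matter how many cores are added. The sketch below is a minimal version of that formula. It deliberately ignores the inter-core communication and resource-sharing costs discussed above, which make real scaling worse, and the 90% parallel fraction is a hypothetical value.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over one core when only part of the work can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workload in which 90% of the work parallelizes perfectly.
    for cores in (1, 2, 4, 8, 16, 64):
        print(f"{cores:>2} cores: {amdahl_speedup(0.9, cores):.2f}x")
```

Even with 90% of the work parallel, the speedup in this model can never exceed 10x, which is why efficient sharing and a fast serial path both matter.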

Evolution of CPU Microarchitecture

CPU microarchitecture has evolved significantly over the years, driven by the need for higher performance and efficiency. Here are some notable milestones in the evolution of CPU microarchitecture:

Early Microarchitectures

Early CPUs like the Intel 4004 and 8086 had simple microarchitectures with limited parallelism and basic execution units. They processed essentially one instruction at a time and had very low performance by modern standards.

Superscalar Architectures

The introduction of superscalar architectures marked a significant leap in CPU performance. Superscalar CPUs can execute multiple instructions per clock cycle by incorporating multiple execution units and parallel pipelines. The Intel Pentium and AMD K5 are examples of early superscalar CPUs.

Out-of-Order Execution

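Out-of-order execution (OoOE) is a technique that allows CPUs to execute instructions out of their original program order, as soon as their operands are available, while still committing results in order. This approach minimizes idle time and improves performance. The idea dates back to 1960s mainframes; among x86 processors, the Intel Pentium Pro (1995) and AMD K5 (1996) were early implementations, and the AMD Athlon followed in 1999.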

Multi-Core Architectures

The shift to multi-core architectures was driven by the limits of increasing clock speeds, chiefly power consumption and heat. Multi-core CPUs feature multiple processing cores on a single chip, enabling parallel execution of tasks. This approach improves performance for multi-threaded applications and enhances overall system responsiveness.

Advanced Microarchitectures

Modern CPUs feature advanced microarchitectures with sophisticated techniques like simultaneous multithreading (SMT), advanced branch prediction, and large cache hierarchies. Examples include Intel’s Core microarchitectures and AMD’s Zen family (sold under the Ryzen brand), which deliver high performance and efficiency for a wide range of applications.

Case Studies: Microarchitecture in Action

To illustrate the impact of microarchitecture on performance, let’s examine two case studies: Intel’s Skylake and AMD’s Zen architectures.

Intel Skylake

Intel’s Skylake microarchitecture introduced several enhancements over its predecessor, Broadwell. Key improvements included:

- Improved Pipeline: Skylake featured a more efficient pipeline with better branch prediction and out-of-order execution capabilities.

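- Cache Hierarchy: Client Skylake kept Broadwell’s L1 (32 KB instruction plus 32 KB data) and L2 (256 KB per core) cache sizes; the notable capacity increase came with the later Skylake server variant, which enlarged the L2 cache to 1 MB per core.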

- Enhanced Power Efficiency: Skylake incorporated advanced power management techniques, enabling better performance-per-watt.

These enhancements improved both single-threaded and multi-threaded performance over Broadwell, making Skylake a popular choice for a wide range of computing tasks.

AMD Zen

AMD’s Zen microarchitecture marked a major comeback for the company in the CPU market. Key features of Zen included:

- Simultaneous Multithreading (SMT): Zen was AMD’s first microarchitecture to support SMT, allowing each core to run two hardware threads at once and improving multi-threaded performance.

- Efficient Cache Hierarchy: Zen featured a well-designed cache hierarchy with large L3 caches, reducing memory access latency.

- High IPC (Instructions Per Cycle): Zen focused on improving IPC, resulting in better single-threaded performance.

Zen’s innovative design and competitive performance made it a strong contender in the CPU market, challenging Intel’s dominance and offering consumers more choices.

FAQ

What is the difference between microarchitecture and instruction set architecture (ISA)?

The instruction set architecture (ISA) defines the set of instructions a CPU can execute, while the microarchitecture determines how these instructions are implemented and processed within the CPU. In other words, ISA is the “what” and microarchitecture is the “how.”

How does cache size impact CPU performance?

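Larger caches can hold more of a program’s working set, so fewer accesses have to go to main memory. This lowers average memory access latency and improves overall performance, especially for data-intensive applications. The trade-off is that larger caches are slower to access and cost more area and power, which is why CPUs use a hierarchy of small, fast caches backed by larger, slower ones.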
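To make the capacity effect concrete, here is a minimal simulation of a fully associative cache with least-recently-used (LRU) replacement. Real caches are usually set-associative and use cheaper approximations of LRU, so this is only a sketch under simplifying assumptions; the function name, the 64-byte line size, and the access pattern are all hypothetical.

```python
from collections import OrderedDict


def lru_hit_rate(addresses, capacity_lines: int, line_size: int = 64) -> float:
    """Fraction of accesses that hit in a fully associative LRU cache."""
    if capacity_lines < 1:
        raise ValueError("capacity_lines must be at least 1")
    cache = OrderedDict()  # keys are cache line numbers, ordered oldest to newest
    hits = 0
    for address in addresses:
        line = address // line_size
        if line in cache:
            hits += 1
            cache.move_to_end(line)        # mark as most recently used
        else:
            if len(cache) >= capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
            cache[line] = True
    return hits / len(addresses) if addresses else 0.0


if __name__ == "__main__":
    # Hypothetical workload: sweep over 8 cache lines of data, 10 times in a row.
    trace = [i * 64 for i in range(8)] * 10
    for capacity in (4, 8):
        print(f"{capacity} lines: hit rate {lru_hit_rate(trace, capacity):.0%}")
```

With this access pattern the 4-line cache never hits, because each line is evicted just before it is reused, while the 8-line cache holds the whole working set and misses only on the first sweep.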

What is out-of-order execution, and why is it important?

Out-of-order execution (OoOE) allows the CPU to execute instructions out of their original order, as soon as their inputs are ready, to optimize resource utilization and minimize idle time. This improves performance by keeping execution units busy while earlier instructions wait, for example on a cache miss, instead of stalling the whole pipeline.
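The difference can be sketched with a toy scheduler that issues at most one instruction per cycle. In in-order mode only the oldest waiting instruction may issue; in out-of-order mode the oldest instruction whose inputs are ready may issue. The model assumes every register is written exactly once (as if registers were already renamed) and that instructions only read registers produced earlier in the program; the `Instr` type, register names, and latencies are invented for the example.

```python
from typing import NamedTuple, Tuple


class Instr(NamedTuple):
    dest: str               # register written by this instruction
    srcs: Tuple[str, ...]   # registers read by this instruction
    latency: int            # cycles from issue until the result is available


def total_cycles(program, out_of_order: bool) -> int:
    """Cycle at which the last result becomes available (single-issue toy model)."""
    # Results of instructions that have not issued yet are not available.
    ready_at = {instr.dest: float("inf") for instr in program}
    pending = list(program)
    cycle = 0
    finish = 0
    while pending:
        # In-order: only the oldest instruction is a candidate this cycle.
        candidates = pending if out_of_order else pending[:1]
        for instr in candidates:
            # Registers never written by the program are inputs, ready from cycle 0.
            if all(ready_at.get(src, 0) <= cycle for src in instr.srcs):
                ready_at[instr.dest] = cycle + instr.latency
                finish = max(finish, cycle + instr.latency)
                pending.remove(instr)
                break  # at most one instruction issues per cycle
        cycle += 1
    return finish


if __name__ == "__main__":
    program = [
        Instr("r1", ("a",), 4),        # slow load
        Instr("r2", ("r1",), 1),       # depends on the load
        Instr("r3", ("x", "y"), 3),    # independent multiply
        Instr("r4", ("r3", "r2"), 1),  # needs both results
    ]
    print("in-order:    ", total_cycles(program, out_of_order=False), "cycles")
    print("out-of-order:", total_cycles(program, out_of_order=True), "cycles")
```

In this example the out-of-order mode starts the independent multiply while the load is still outstanding, finishing in 6 cycles instead of 9.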

How does branch prediction work?

Branch prediction is a technique used to guess the direction of branch instructions (e.g., those generated by if-else statements) before their outcome is known. The CPU speculatively executes along the predicted path. When the prediction is right, the pipeline never waits for the branch outcome; when it is wrong, the speculative work is discarded and the pipeline restarts from the correct path, which costs several cycles.
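One classic textbook scheme is the two-bit saturating counter, which only changes its prediction after two wrong guesses in a row. The sketch below simulates a single such counter on a recorded sequence of branch outcomes. It is an illustration of the general idea, not a description of the far more elaborate predictors in any particular CPU, and the function name and test patterns are assumptions.

```python
def two_bit_predictor_accuracy(outcomes, initial_state: int = 2) -> float:
    """Prediction accuracy of one 2-bit saturating counter.

    outcomes: sequence of booleans, True meaning the branch was taken.
    States 0 and 1 predict "not taken"; states 2 and 3 predict "taken".
    """
    state = initial_state
    correct = 0
    total = 0
    for taken in outcomes:
        predicted_taken = state >= 2
        if predicted_taken == taken:
            correct += 1
        total += 1
        # Move toward 3 on taken, toward 0 on not taken, saturating at both ends.
        state = min(state + 1, 3) if taken else max(state - 1, 0)
    return correct / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical loop branch: taken 9 times, then falls through, repeated 10 times.
    loop_branch = ([True] * 9 + [False]) * 10
    # Hypothetical worst case for this scheme: strictly alternating outcomes.
    alternating = [True, False] * 50
    print(f"loop branch: {two_bit_predictor_accuracy(loop_branch):.0%}")
    print(f"alternating: {two_bit_predictor_accuracy(alternating):.0%}")
```

The loop branch is predicted correctly 90% of the time, since only the final fall-through of each loop is missed, while the alternating pattern defeats this simple counter half the time.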

What is simultaneous multithreading (SMT)?

Simultaneous multithreading (SMT) allows each CPU core to run multiple hardware threads at the same time. When one thread stalls or cannot fill all of the core’s execution resources, another thread can use them, which improves multi-threaded performance and increases instruction throughput.
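A very rough way to picture the benefit is to count how many of a core’s issue slots are filled each cycle. In the sketch below, each thread is described by how many instructions it has ready in each cycle, and the core can issue up to a fixed number per cycle across all threads. This is a deliberately simplified model: instructions that do not issue are not carried over to later cycles, and the function name, issue width, and per-cycle numbers are all hypothetical.

```python
def issue_slot_utilization(ready_per_cycle_by_thread, issue_width: int) -> float:
    """Fraction of issue slots used when the given threads share one core.

    ready_per_cycle_by_thread: one list per thread, each giving the number of
    instructions that thread could issue in each cycle (all lists the same length).
    """
    cycles = len(ready_per_cycle_by_thread[0])
    issued = 0
    for cycle in range(cycles):
        ready = sum(thread[cycle] for thread in ready_per_cycle_by_thread)
        issued += min(issue_width, ready)  # the core cannot exceed its issue width
    return issued / (issue_width * cycles)


if __name__ == "__main__":
    # Hypothetical threads on a 4-wide core; zeros represent stall cycles.
    thread_a = [4, 0, 0, 2, 4, 0]
    thread_b = [0, 3, 2, 1, 0, 4]
    print(f"thread A alone: {issue_slot_utilization([thread_a], 4):.0%}")
    print(f"thread B alone: {issue_slot_utilization([thread_b], 4):.0%}")
    print(f"A and B (SMT):  {issue_slot_utilization([thread_a, thread_b], 4):.0%}")
```

In this made-up example each thread alone fills about 42% of the slots, while sharing the core fills about 83%, because one thread’s stall cycles are covered by the other’s ready instructions.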

Conclusion

Understanding CPU microarchitecture is essential for appreciating the complexities of modern computing. The design and organization of a CPU’s components significantly impact its performance, efficiency, and scalability. As technology continues to evolve, advancements in microarchitecture will play a crucial role in driving the next generation of high-performance computing devices. Whether you are a tech enthusiast, a software developer, or a hardware engineer, a deep understanding of CPU microarchitecture will empower you to make informed decisions and optimize your computing experience.