How CPUs Handle Large-Scale Simulations

Introduction

Central Processing Units (CPUs) execute the instructions and perform the calculations that drive applications and systems. Among their most demanding workloads are large-scale simulations, which are essential in fields such as climate modeling, astrophysics, financial forecasting, and engineering. This article explains how CPUs manage these simulations, covering the architecture, techniques, and challenges involved.

Understanding Large-Scale Simulations

What Are Large-Scale Simulations?

Large-scale simulations are computational models that replicate real-world processes or systems over extended periods and vast spatial domains. These simulations require immense computational power and memory to handle the intricate calculations and data involved. Examples include weather prediction models, molecular dynamics simulations, and fluid dynamics simulations.

Importance of Large-Scale Simulations

Large-scale simulations are crucial for advancing scientific research, engineering design, and decision-making processes. They allow researchers to test hypotheses, predict outcomes, and optimize systems without the need for costly and time-consuming physical experiments. For instance, climate models help project long-term climate trends, while engineering simulations aid in designing safer and more efficient structures.

CPU Architecture and Its Role in Simulations

Core Components of a CPU

To understand how CPUs handle large-scale simulations, it’s essential to grasp the core components of a CPU:

- Arithmetic Logic Unit (ALU): Performs arithmetic and logical operations.

- Control Unit (CU): Directs the operation of the processor by fetching and decoding instructions and coordinating their execution by the other components.

- Registers: Small, fast storage locations within the CPU used to hold data and instructions temporarily.

- Cache: A small, fast volatile memory located on or near the CPU that holds copies of frequently used data from main memory, so the CPU can reach that data more quickly.

- Clock: Synchronizes the operations of the CPU by generating a regular sequence of pulses.

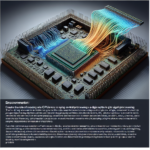

Parallel Processing

One of the key techniques CPUs use to handle large-scale simulations is parallel processing. This involves dividing a large problem into smaller sub-problems that can be solved simultaneously. Modern CPUs are equipped with multiple cores, each capable of executing instructions independently. By leveraging multi-core architectures, CPUs can perform parallel processing, significantly speeding up simulations.
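The divide-and-combine pattern described above can be sketched as follows. This is a minimal illustration, not a production design: the workload (a sum of squares) and the names `split_into_chunks`, `sum_of_squares`, and `parallel_sum_of_squares` are hypothetical, chosen only for this example. It uses Python's standard `concurrent.futures` module, with a process pool as the default so that each chunk can run on its own core.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split values into at most n_chunks contiguous, near-equal slices."""
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    size, remainder = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks take one extra element.
        end = start + size + (1 if i < remainder else 0)
        if end > start:
            chunks.append(values[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The sub-problem: each worker processes one chunk independently."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, n_workers, executor_factory=ProcessPoolExecutor):
    """Divide the input, solve the pieces concurrently, combine the results."""
    chunks = split_into_chunks(values, n_workers)
    with executor_factory(max_workers=n_workers) as pool:
        # map() hands one chunk to each worker; summing combines the partials.
        return sum(pool.map(sum_of_squares, chunks))
```

A call such as `parallel_sum_of_squares(list(range(1_000_000)), 4)` would split the work four ways. With the default process pool, the call should be made from under an `if __name__ == "__main__":` guard. Passing `ThreadPoolExecutor` keeps the same structure, but in standard CPython threads do not run CPU-bound Python code on several cores at once, so it mainly serves to show the pattern.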

Vector Processing

Vector processing is another technique used to enhance the performance of large-scale simulations. It involves performing the same operation on multiple data points simultaneously, an approach known as Single Instruction, Multiple Data (SIMD). CPUs with vector extensions such as Advanced Vector Extensions (AVX) can handle large datasets more efficiently by processing multiple elements with a single instruction.
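To get a feel for the numbers, the short calculation below works out how many elements fit in one vector register and how many vector instructions an idealized loop would need. AVX registers are 256 bits wide and AVX-512 registers are 512 bits wide. The function names are hypothetical, and the instruction count is a deliberately simplified model that ignores memory bandwidth, alignment, and loop overhead.

```python
def lanes_per_register(register_bits, element_bits):
    """Number of elements one vector register can hold."""
    if register_bits % element_bits != 0:
        raise ValueError("element size must divide the register width")
    return register_bits // element_bits


def vector_instructions_needed(n_elements, lanes):
    """Idealized count of vector operations to cover n_elements (rounded up)."""
    return (n_elements + lanes - 1) // lanes


avx_float32_lanes = lanes_per_register(256, 32)     # 8 single-precision values
avx_float64_lanes = lanes_per_register(256, 64)     # 4 double-precision values
avx512_float32_lanes = lanes_per_register(512, 32)  # 16 single-precision values

# Adding two arrays of 1,000 single-precision values: 1,000 scalar additions
# versus 125 eight-wide vector additions in this simplified model.
vector_adds = vector_instructions_needed(1000, avx_float32_lanes)
```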

Techniques for Optimizing CPU Performance in Simulations

Load Balancing

Load balancing is crucial for optimizing CPU performance in large-scale simulations. It involves distributing the computational workload evenly across all available CPU cores to prevent any single core from becoming a bottleneck. Effective load balancing ensures that all cores are utilized efficiently, maximizing overall performance.
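One simple way to even out the workload is a greedy heuristic: sort tasks from most to least expensive and always hand the next task to the currently least-loaded core. The sketch below assumes task costs are known in advance, which is often not true in practice. The function name `assign_tasks` is hypothetical, and real simulation frameworks may use dynamic schemes such as work stealing instead.

```python
import heapq


def assign_tasks(task_costs, n_cores):
    """Greedily assign tasks (largest first) to the least-loaded core.

    Returns (assignment, loads): assignment[c] lists the task indices given
    to core c, and loads[c] is the total cost assigned to core c.
    """
    if n_cores < 1:
        raise ValueError("n_cores must be at least 1")
    # Min-heap of (current_load, core_index): the lightest core is on top.
    heap = [(0, core) for core in range(n_cores)]
    heapq.heapify(heap)
    assignment = [[] for _ in range(n_cores)]
    by_cost = sorted(enumerate(task_costs), key=lambda t: t[1], reverse=True)
    for task_id, cost in by_cost:
        load, core = heapq.heappop(heap)
        assignment[core].append(task_id)
        heapq.heappush(heap, (load + cost, core))
    loads = [0] * n_cores
    for load, core in heap:
        loads[core] = load
    return assignment, loads


# Six tasks with uneven costs spread over two cores.
assignment, loads = assign_tasks([7, 5, 4, 3, 3, 2], 2)
```

In this example both cores end up with a total cost of 12, so neither waits on the other. The heuristic does not guarantee an optimal split for every input, but it tends to work well when a few tasks are much larger than the rest.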

Memory Management

Efficient memory management is vital for handling large-scale simulations. CPUs rely on a hierarchy of memory, including registers, cache, and main memory, to store and access data. Optimizing memory usage involves minimizing data movement between different levels of the memory hierarchy and ensuring that frequently accessed data is stored in faster memory locations.
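Why access order matters can be seen with a small model of how a 2D array laid out row by row maps onto cache lines. The sketch below is only a counting model, not a measurement. It assumes a row-major layout, 8-byte elements, 64-byte cache lines (a common size, but an assumption here), and an array that starts on a cache-line boundary. All names are hypothetical.

```python
def row_major_index(row, col, n_cols):
    """Position of element (row, col) in a flat, row-major array."""
    return row * n_cols + col


def cache_lines_touched(flat_indices, elements_per_line):
    """Count the distinct cache lines covered by the given element positions."""
    return len({index // elements_per_line for index in flat_indices})


N_ROWS = N_COLS = 8
ELEMENTS_PER_LINE = 8  # assumption: 64-byte line / 8-byte element

# Walk along the first row: neighbouring elements sit next to each other.
row_wise = [row_major_index(0, c, N_COLS) for c in range(N_COLS)]
# Walk down the first column: each step jumps a whole row ahead in memory.
col_wise = [row_major_index(r, 0, N_COLS) for r in range(N_ROWS)]

row_lines = cache_lines_touched(row_wise, ELEMENTS_PER_LINE)  # 1 line
col_lines = cache_lines_touched(col_wise, ELEMENTS_PER_LINE)  # 8 lines
```

Under these assumptions, eight row-wise accesses are served by a single cache line, while eight column-wise accesses each pull in a different line. Ordering loops so the innermost one follows the memory layout is one way to reduce data movement between cache and main memory.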

Algorithm Optimization

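Optimizing the algorithms used in simulations can significantly enhance CPU performance. The first step is selecting the most efficient algorithm for the specific problem at hand, since a lower computational complexity usually outweighs any low-level tuning. The chosen algorithm can then be fine-tuned at the code level: loop unrolling reduces loop-control overhead, inlining removes function-call overhead, and vectorization processes several data elements per instruction. Together, these techniques reduce the number of instructions the CPU executes, speeding up simulations.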
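A small example of choosing a cheaper algorithm for the same result is polynomial evaluation. Horner's method evaluates a polynomial of degree n with n multiplications and n additions, whereas a direct term-by-term evaluation also has to compute each power of x. The function names below are hypothetical, and coefficients are assumed to be listed from the highest power down to the constant term.

```python
def evaluate_naive(coefficients, x):
    """Evaluate term by term, computing each power of x separately."""
    degree = len(coefficients) - 1
    return sum(c * x ** (degree - i) for i, c in enumerate(coefficients))


def evaluate_horner(coefficients, x):
    """Horner's method: one multiply and one add per coefficient."""
    result = 0
    for c in coefficients:
        result = result * x + c
    return result


# 2x^3 - x + 5 at x = 3; both functions return 56.
coeffs = [2, 0, -1, 5]
naive_value = evaluate_naive(coeffs, 3)
horner_value = evaluate_horner(coeffs, 3)
```

Both functions give the same value for exact inputs such as integers. With floating-point inputs the results can differ slightly in the last digits because the operations are carried out in a different order.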

Challenges in Handling Large-Scale Simulations

Scalability

One of the primary challenges in handling large-scale simulations is scalability. As the size and complexity of simulations increase, the computational requirements can grow much faster than the problem size; how fast depends on the method used. Ensuring that the CPU can scale efficiently to handle larger simulations without significant performance degradation is a critical challenge.
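How quickly the cost grows depends on the method. As a rough illustration, the sketch below counts work for two hypothetical cases chosen only for this example: a uniform 3D grid, where work is taken to be proportional to the number of cells, and a direct all-pairs interaction scheme, where work is proportional to the number of distinct pairs. The function names are assumptions, not part of any particular simulation code.

```python
def grid_cells(points_per_axis, dimensions=3):
    """Number of cells in a uniform grid with the given resolution per axis."""
    return points_per_axis ** dimensions


def pairwise_interactions(n_bodies):
    """Number of distinct pairs when every body interacts with every other."""
    return n_bodies * (n_bodies - 1) // 2


# Doubling the resolution of a 3D grid multiplies the cell count by 8.
grid_growth = grid_cells(200) // grid_cells(100)

# Doubling the number of bodies roughly quadruples the pair count.
pairs_1000 = pairwise_interactions(1000)  # 499,500 pairs
pairs_2000 = pairwise_interactions(2000)  # 1,999,000 pairs
```

In both cases a modest increase in problem size multiplies the work several times over, which is why larger simulations can outgrow the available hardware quickly.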

Data Management

Large-scale simulations generate vast amounts of data that need to be stored, processed, and analyzed. Managing this data efficiently is a significant challenge, requiring effective data storage solutions, high-speed data transfer mechanisms, and robust data analysis tools.

Energy Consumption

Handling large-scale simulations requires substantial computational power, which in turn leads to high energy consumption. Optimizing energy efficiency while maintaining performance is a critical challenge, especially in large data centers and high-performance computing environments.

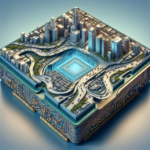

Future Trends in CPU Design for Simulations

Heterogeneous Computing

Heterogeneous computing involves using different types of processors, such as CPUs, Graphics Processing Units (GPUs), and specialized accelerators, to handle different aspects of a simulation. This approach can significantly enhance performance by leveraging the strengths of each processor type for specific tasks.

Quantum Computing

Quantum computing holds the potential to revolutionize some large-scale simulations, particularly simulations of quantum systems such as molecules and materials. While still in its early stages, it could eventually handle certain complex simulations that are currently beyond the reach of classical CPUs.

Neuromorphic Computing

Neuromorphic computing is an emerging field that aims to mimic the structure and function of the human brain. By designing processors that operate more like neural networks, researchers hope to achieve significant improvements in computational efficiency and performance for large-scale simulations.

FAQ

What is the role of a CPU in large-scale simulations?

The CPU is responsible for executing the instructions and performing the calculations required for large-scale simulations. It handles tasks such as data processing, memory management, and parallel processing to ensure that simulations run efficiently and accurately.

How do CPUs handle parallel processing in simulations?

CPUs handle parallel processing by dividing a large problem into smaller sub-problems that can be solved simultaneously. Modern CPUs are equipped with multiple cores, each capable of executing instructions independently. By leveraging multi-core architectures, CPUs can perform parallel processing, significantly speeding up simulations.

What are the main challenges in handling large-scale simulations?

The main challenges in handling large-scale simulations include scalability, data management, and energy consumption. Ensuring that the CPU can scale efficiently to handle larger simulations, managing the vast amounts of data generated, and optimizing energy efficiency while maintaining performance are critical challenges.

What future trends are expected in CPU design for simulations?

Future trends in CPU design for simulations include heterogeneous computing, quantum computing, and neuromorphic computing. These approaches aim to enhance performance by leveraging different types of processors, providing unprecedented computational power, and mimicking the structure and function of the human brain.

Conclusion

CPUs play a crucial role in handling large-scale simulations, enabling researchers and engineers to model complex systems and processes with high accuracy and efficiency. By leveraging techniques such as parallel processing, vector processing, and algorithm optimization, CPUs can manage the immense computational demands of these simulations. However, challenges such as scalability, data management, and energy consumption remain significant. Future trends, including heterogeneous computing, quantum computing, and neuromorphic computing, hold the potential to extend what large-scale simulations can achieve, whether by working alongside CPUs or by taking over tasks that classical CPUs handle poorly.